Explore insights by category

Data and analytics | Finances and payments | Mental health | Patient and member engagement | Pharmacy care | Pharmacy management | Technology and automation | Value-based care | View all

Latest featured insights

Article

Learn how health plans can fill care gaps by offering greater support and personalized solutions for women experiencing menopause.

![A Quarter-Century of Pharmacy Benefit Changes [E-Book]](/content/dam/optum-dam/images/business/pharmacy/hand-with-vial-1080x720.jpg)

E-book

Explore the dramatic changes in the years since 2000 — in drug performance and design, and especially affordability.

Article

Explore how evidence-based tools like The ASAM Criteria® Navigator and InterQual® Substance Use Disorders Criteria are helping payers and providers efficiently and effectively navigate the complex substance use disorder landscape today.

![Automation Strategies for Empowering Staff and Patients [Webinar]](/content/dam/o4-dam/images/professionals/company-meeting-1080x720.jpg)

On-demand webinar

Learn how to help transform hospital revenue cycle operations with healthcare automation.

Data and analytics

Article

Understand how routine clinical practice impacts information captured in real-world data (RWD).

On-demand webinar

Hear from experts in this Endpoints News webinar on the increasing importance of clinicogenomic data, including diverse phenotypic and genotypic profiles.

Video

Optum Life Sciences leaders break down common missteps when using RWD and how to create practical strategies to overcome them. Watch the video from STAT Summit.

Article

Providers collect data about patients every day. But what should they be considering — and doing — to put their clinical data to good use?

Finances and payments

Article

Discover 4 ways health plans can save money today — and in the future — by engaging with a digital claim payment partner.

Article

Learn how AI is changing the fraud case review process.

Article

The connection between money and health runs deeper than just costs — your employees rely on your benefits strategy for overall wellness.

Article

Learn how payment delivery optimization can improve the healthcare financial cycle and bridge the gap between payers and providers.

Mental health

![Leveraging GLP-1s Within a Whole-Person Approach [Webinar]](/content/dam/optum-dam/images/business/insights/plus-size-woman-smiling-1080x720.jpg)

On-demand webinar

A holistic, strategic approach to GLP-1s combines medication, surgery and behavioral health to support weight management and reduce costs.

Article

Learn about current research on mobile crisis teams (MCTs), how to build an MCT and what the future could hold for them.

Article

Recognizing the unique perspectives of neurodivergent individuals and creating an inclusive workplace can enrich and empower your teams.

![Helping Members Find In-the-Moment Support [Video]](/content/dam/optum-dam/resources/videos/business/ews-member-testimonial-video/ews-member-testimonial-video-thumbnail-1080x720.jpg)

Video

See how one mother found in-the-moment support during a family crisis.

Patient and member engagement

Article

Learn how Optum Serve is helping to advance the health and well-being of Veterans and spouses in the trucking and cybersecurity workforce.

Guide

Learn how Optum Serve helped Veterans achieve health and wellness goals, promoted well-being, prevented illness and reduced the burden of chronic conditions.

White paper

Learn how Optum Serve supports Veterans through a whole-health approach to work and life.

Infographic

Find out what we learned when we asked more than 1,000 people how they feel about the pre-service journey, from provider search to AI use.

Pharmacy care

Article

On average, Optum Infusion nurses spend approximately 1,300 hours per year providing care to patients. Hilary, an Optum Infusion nurse, knows this firsthand.

![Trusted Specialty Pharmacy Support [Video]](/content/dam/optum-dam/resources/videos/business/specialty-awareness-provider/specialty-awareness-provider.jpg)

Video

Optum Specialty Pharmacy simplifies prior authorizations, offers patient support and helps you save time with digital tools.

Video

Our infusion care ecosystem is based on a commitment to clinical innovation and providing consistent, convenient and compassionate care.

Article

In today’s healthcare landscape, the introduction and increasing use of biosimilars offer a promising solution to rising medication costs.

Pharmacy management

Article

In this conversation, get a peek into upcoming solutions and technologies designed to address high-cost medications.

Article

There are many misconceptions about pharmacy benefit managers (PBMs) and their services. Here are the facts.

Article

New pills and expanded indications are poised to alter the dynamics of GLP-1 costs and treatments.

White paper

Evolving federal policy on popular weight loss medications introduces additional cost and coverage uncertainties.

Technology and automation

Case study

Using digital integration to ease administrative burdens can help you spend more time with patients and deliver positive patient outcomes.

White paper

Read the white paper for strategies on controlling fixed costs, improving efficiency and increasing organizational flexibility.

Case study

See how Lima Memorial improved efficiency with advanced technology.

On-demand webinar

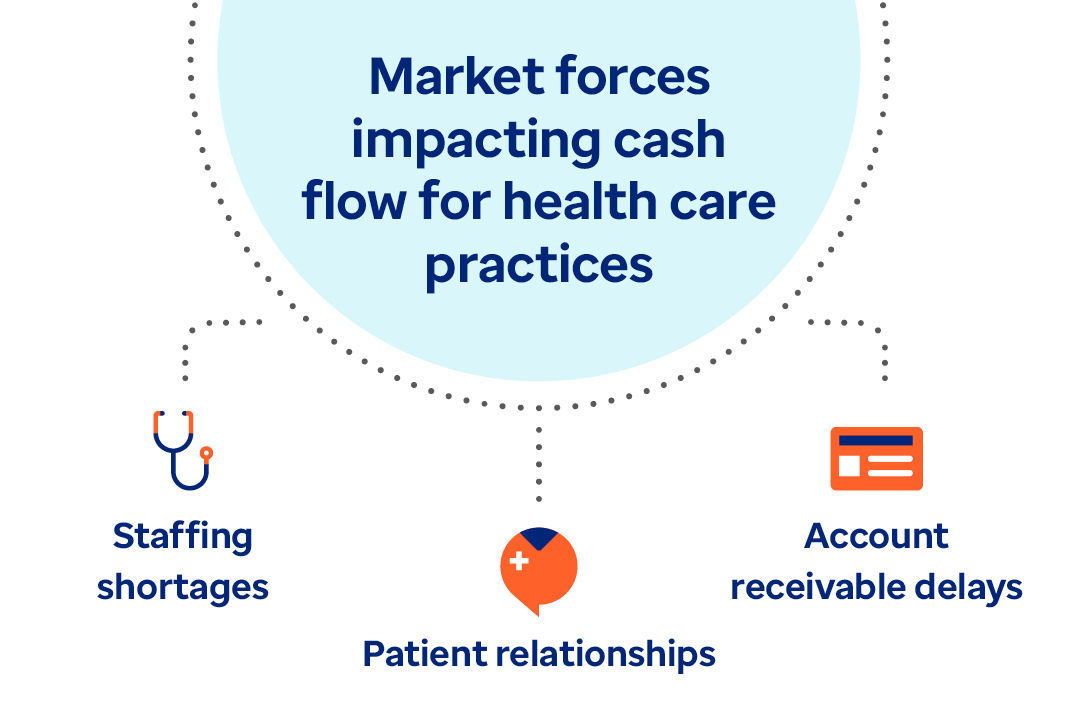

Watch the webinar to learn actionable strategies for tackling shrinking hospital margins, rising labor costs and staffing shortages.

Value-based care

Article

Our holistic approach can help payers fight rising musculoskeletal care costs while better serving members.

Article

Learn how employers can bridge the wellness gap through workplace wellness programs that offer personalized, practical and human support.

Article

Digital MSK can improve outcomes while reducing costs. Learn how payers and employers can choose a program that delivers on health benefits and savings.

Article

Gen Z is changing workplace expectations, and employers must evolve their benefits to keep up.